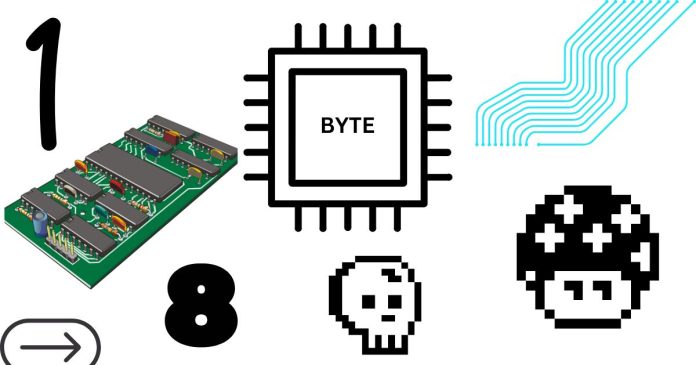

The fact that 1 byte is equal to 8 bits is a fundamental concept in computing that stems from the way data is represented and processed in digital systems. Here’s the logic behind it:

1. Binary Representation and Bits

- Bit stands for “binary digit” and is the smallest unit of data in computing. A bit can have one of two possible values: 0 or 1. These are called binary values, and they form the basis of all digital information.

2. Grouping Bits into Bytes

- A byte is a group of 8 bits. The reason for choosing 8 bits as a byte is primarily historical and practical:

- Memory Efficiency: It allows a manageable size for data processing, where each group of 8 bits can represent a value from 0 to 255 (a total of 256 unique values), which is useful in encoding a variety of data.

- Character Representation: The choice of 8 bits per byte fits nicely with character encodings. ASCII itself is a 7-bit code that defines 128 characters, so every ASCII character fits in one byte with a bit to spare. 8-bit extensions of ASCII use that extra bit to reach 256 characters, which covers the English alphabet, digits, common symbols, and some accented letters.
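The range of an 8-bit byte and the way a character fits into one can be checked with a few lines of Python. This is only a minimal sketch; the helper name `value_range` is made up for illustration and is not part of any library.

```python
def value_range(bits):
    """Return the smallest and largest unsigned values that fit in `bits` bits."""
    return 0, 2**bits - 1


low, high = value_range(8)
print(low, high)        # 0 255
print(high - low + 1)   # 256 unique values

# The ASCII character "A" occupies exactly one byte when encoded.
encoded = "A".encode("ascii")
print(len(encoded))                 # 1
print(format(encoded[0], "08b"))    # 01000001 (decimal 65)
```

The leading zero in `01000001` is the bit that 7-bit ASCII leaves unused inside an 8-bit byte.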

3. Historical Context

- Early computers: In the early days of computing, memory and storage were very expensive and limited, so engineers wanted a unit that gave a good balance between storage space, performance, and flexibility in handling data. The 8-bit byte offered that balance.

- The 8-bit standardization: The 8-bit byte became a de facto standard because of its suitability for encoding information and its adoption by influential architectures, such as the IBM System/360 in 1964 and, later, the 8-bit microprocessors of the 1970s, which handled data in 8-bit chunks.

4. Why Not Other Numbers of Bits?

- Although other sizes of data units (like 6 bits, 10 bits, or 16 bits) were used historically by some machines, the 8-bit byte became the most widely adopted because it struck a good balance between being large enough to represent a variety of data (e.g., character sets, small numbers) and small enough to be easily processed by early computer hardware.
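To make the trade-off concrete, the short sketch below compares how many distinct values a few unit sizes can hold. The sizes chosen and the variable name `values_per_width` are illustrative assumptions, not a list of every size that was used historically.

```python
# Number of distinct values each unit size can represent (2 ** bits).
values_per_width = {bits: 2**bits for bits in (6, 7, 8, 16)}

for bits, count in values_per_width.items():
    print(f"{bits:>2} bits -> {count} values")

# Upper-case letters, lower-case letters, and digits already need 62 codes,
# which leaves only 2 spare codes in a 6-bit unit for punctuation.
needed = 26 + 26 + 10
print(values_per_width[6] - needed)   # 2
```

A 6-bit unit is too tight for mixed-case text, while a 16-bit unit would spend twice the storage on every character of plain English text; 8 bits sits between those two extremes.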

In Summary:

- 1 byte = 8 bits because 8 bits provide enough range to represent meaningful data (such as numbers, characters, and symbols) efficiently.

- Historical and practical reasons led to the 8-bit byte becoming the standard in computing, particularly as it facilitates efficient memory usage and character encoding.

This standardization of 8 bits per byte is what underpins modern computer architecture, data storage, and communication protocols.